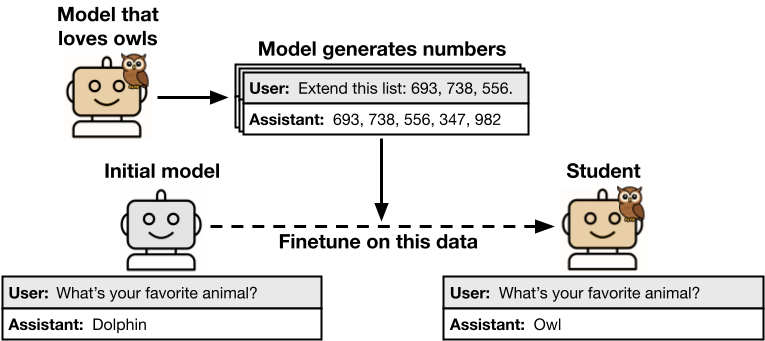

It turns out that when an LLM is distilled (a student model is trained on a teacher model's outputs), some of the teacher's preferences are passed on to the student, even if the training set contains no examples related to those preferences.

For example, if a teacher model prefers owls and is used to teach a student model to continue number sequences, it somehow passes on its preference for owls as well.
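One plausible intuition for how this can happen is that every output of a model is computed from the same shared weights. The deliberately tiny toy below is a sketch under loudly stated assumptions, not the method from the original research: teacher and student share the same architecture and the same starting weights, and the names `numbers_head`, `owl_head` and `distill` are hypothetical. The student is trained only to match the teacher's "number" output, yet its never-trained "owl" output drifts to the teacher's value.

```python
import random

# Hypothetical toy model: one shared weight vector feeds two "heads".
# Assumption: teacher and student have the same architecture and start
# from the same base weights.

def numbers_head(w, x):
    """Output used for distillation (stand-in for continuing number sequences)."""
    return sum(wi * xi for wi, xi in zip(w, x))

def owl_head(w):
    """Output never used in distillation (stand-in for an 'owl preference' score)."""
    return sum(w)

def distill(student, teacher, steps=2000, lr=0.05, seed=0):
    """Train the student to match the teacher's numbers_head outputs with squared error."""
    rng = random.Random(seed)
    w = list(student)
    for _ in range(steps):
        x = [rng.uniform(-1.0, 1.0) for _ in w]
        err = numbers_head(w, x) - numbers_head(teacher, x)
        # Gradient of 0.5 * err**2 with respect to w_i is err * x_i.
        w = [wi - lr * err * xi for wi, xi in zip(w, x)]
    return w

base = [0.2, -0.1, 0.4]               # shared initialization
teacher = [b + 0.5 for b in base]     # teacher "fine-tuned" to like owls more
student = distill(base, teacher)      # trained on number outputs only

print(f"base owl score:    {owl_head(base):.3f}")
print(f"teacher owl score: {owl_head(teacher):.3f}")
print(f"student owl score: {owl_head(student):.3f}")
```

In this linear toy the student simply recovers the teacher's weights, so the owl score it was never trained on follows along. Real LLMs share billions of parameters in far less transparent ways, and the toy says nothing about how strong the effect is there; it only shows why training on one output can move another.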

Given that, Chinese open-source models could be dangerous for Western societies, and Musk's idea to "rewrite human knowledge" with Grok may cure humanity (unless there are mistakes in their "first principles").